Potential performance improvement of using Rust on Azure Functions

Entirely Non-Scientific tests showing potential speedups on Matrix Multiplication

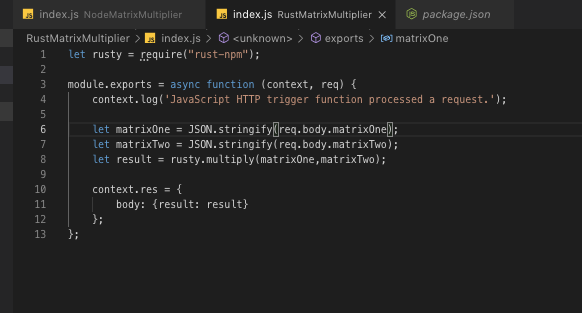

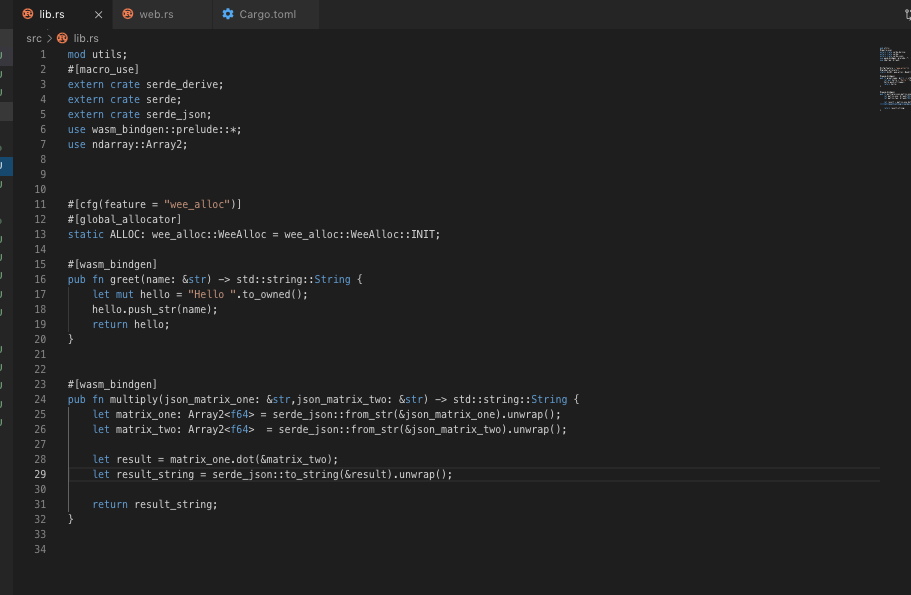

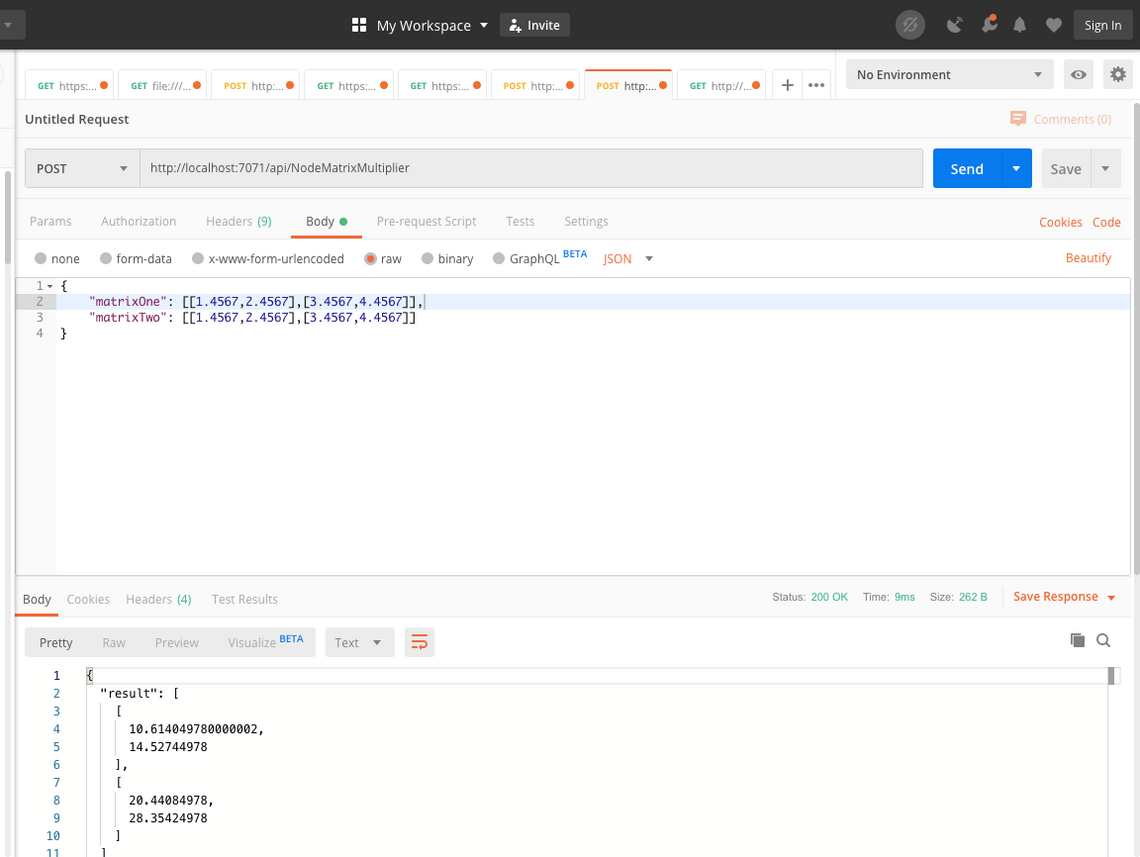

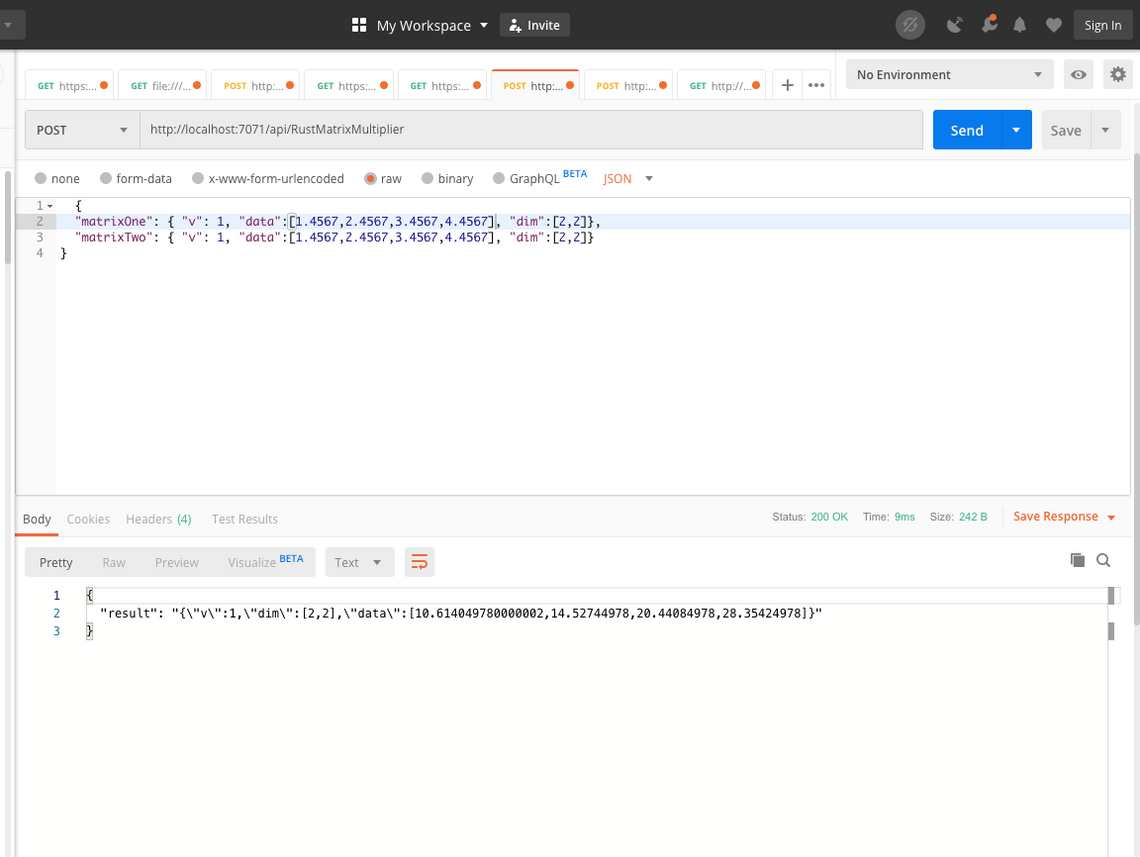

My previous article detailed a proof of concept showing how to use Rust on the fully supported Node.js Azure Functions runtime. It relied on WebAssembly: by compiling Rust to WebAssembly, it was possible to execute it from Node.js. One thing I didn’t mention there is that, in principle, any language that can be compiled to WebAssembly could be brought to Azure Functions the same way, so this approach is not limited to Rust.

I’ve since done some further work on whether there is any point to this beyond language choice, namely whether it brings any performance improvements. The test I created is pretty rudimentary, and developers with far more experience in performance testing will probably find it riddled with flaws, but I hope it is still instructive.

The test makes heavy use of libraries, because it is intended to show the differences available to your average application developer. I’m sure further gains could be had by hand-coding optimized implementations, but realistically most app developers aren’t going to do that. I created two functions, both of which implement a matrix multiplication. One is written in plain Node.js using the very popular Math.js library. The other passes the work straight through to Rust, using the ndarray library. Math.js uses a different matrix format from ndarray by default, so to minimize the cost of converting between them I passed the data through with as little reformatting as possible.
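By default Math.js represents a matrix as nested arrays of rows, while ndarray works on a contiguous buffer with a shape, so some conversion has to happen when data crosses into Rust. The sketch below shows what that hand-off and the multiplication itself might look like in plain Rust. It is not the code from this experiment: it assumes the input arrives as Math.js-style nested rows, uses only the standard library instead of ndarray, and `flatten_rows` and `matmul` are hypothetical helper names. The multiplication is a naive loop standing in for ndarray's optimized matrix product.

```rust
/// Hypothetical helper: flatten a matrix stored as a list of rows (the
/// nested-array shape Math.js uses by default) into one row-major buffer,
/// returning the buffer with its (rows, cols) dimensions.
/// Returns None if the rows have different lengths.
fn flatten_rows(rows: &[Vec<f64>]) -> Option<(Vec<f64>, usize, usize)> {
    let n_rows = rows.len();
    let n_cols = rows.first().map_or(0, |r| r.len());
    let mut flat = Vec::with_capacity(n_rows * n_cols);
    for row in rows {
        if row.len() != n_cols {
            return None; // ragged input is not a valid matrix
        }
        flat.extend_from_slice(row);
    }
    Some((flat, n_rows, n_cols))
}

/// Hypothetical helper: naive row-major product C = A * B, where A is n x m
/// and B is m x p. A stand-in for ndarray's product, with no blocking or SIMD.
fn matmul(a: &[f64], b: &[f64], n: usize, m: usize, p: usize) -> Vec<f64> {
    assert_eq!(a.len(), n * m, "A must be n x m");
    assert_eq!(b.len(), m * p, "B must be m x p");
    let mut c = vec![0.0; n * p];
    for i in 0..n {
        for k in 0..m {
            let a_ik = a[i * m + k];
            // i-k-j loop order walks rows of B and C contiguously
            for j in 0..p {
                c[i * p + j] += a_ik * b[k * p + j];
            }
        }
    }
    c
}
```

Passing one flat buffer rather than a list of rows keeps the boundary crossing to a single copy per matrix. In a real deployment these functions would presumably be exported to Node.js through a binding layer such as wasm-bindgen, which can accept a `&[f64]` and return a `Vec<f64>` as a `Float64Array`; that wiring is an assumption and is left out so the sketch stays self-contained.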

Code Examples

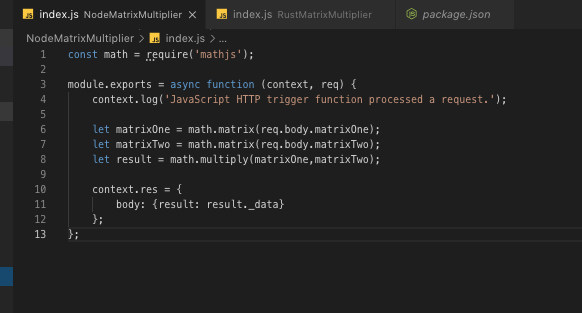

First of all, the Node.js function:

Performance Results

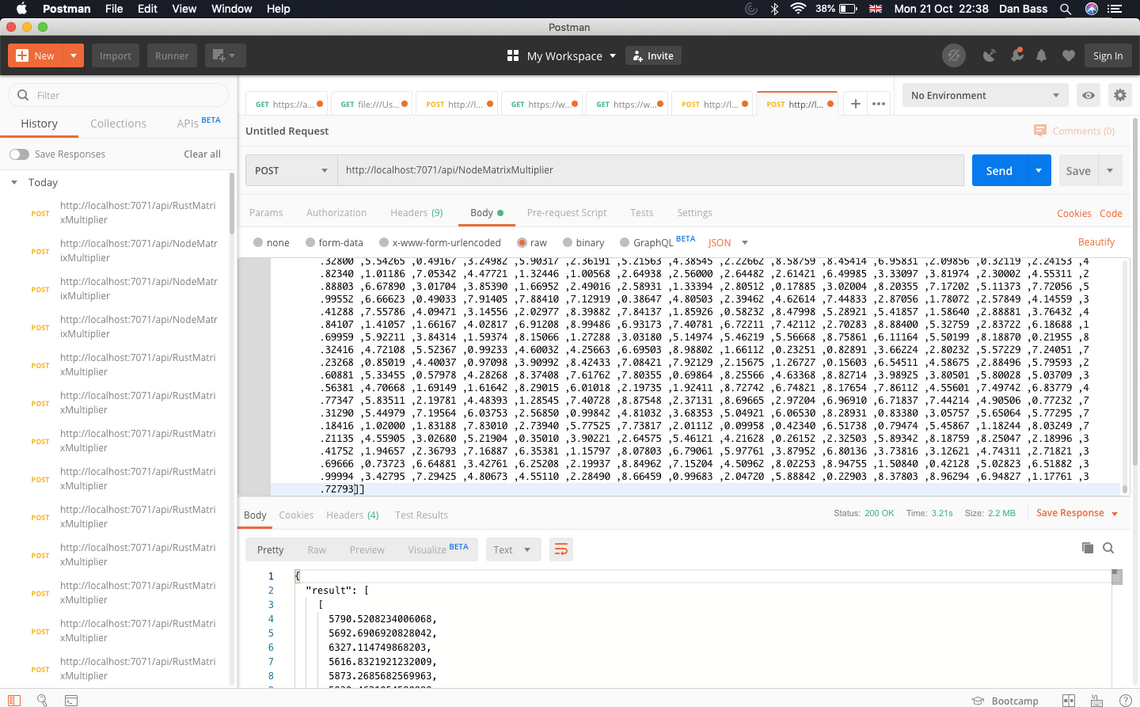

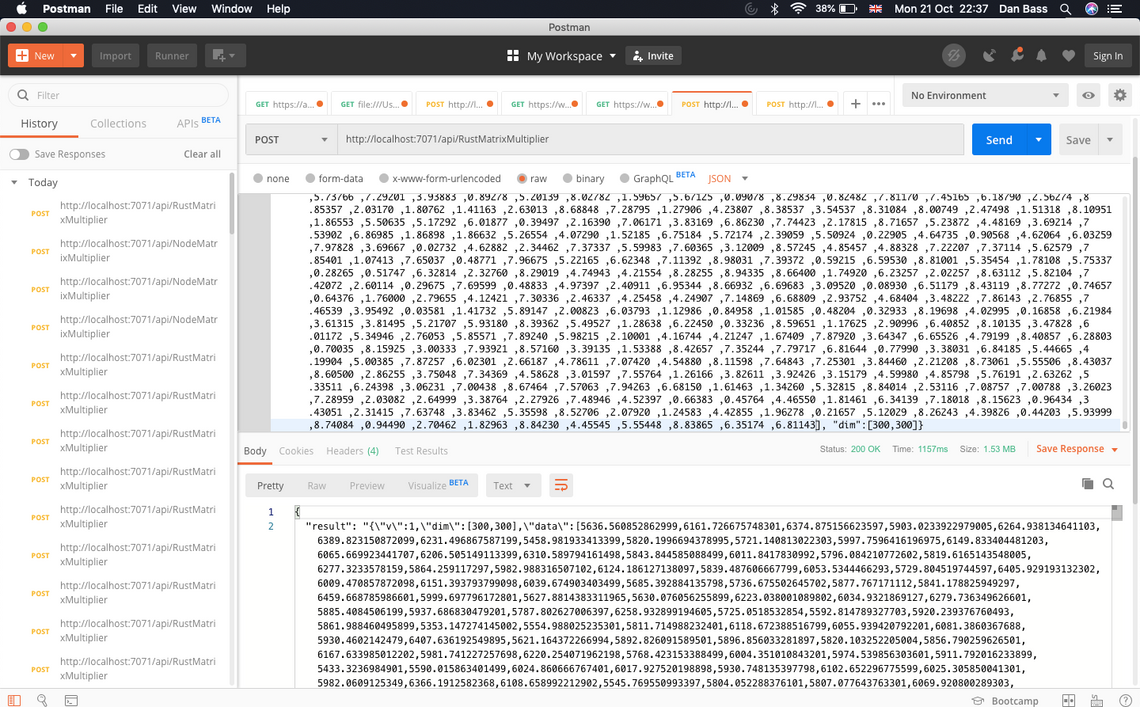

So what are the results? As I stated in my previous blog post, I expected the Rust function to perform better, albeit with some overhead from calling into a separate runtime. My guess was that the Node.js function would be marginally faster for a small matrix, and Rust would be faster for larger matrices. First, the larger matrix: a 300 by 300 dense matrix of random decimals. The Node.js results:

Next, the Rust results:

Then, for the smaller matrix, the Node.js and Rust results:

Conclusion

In these tests, Rust gave a distinct performance improvement over JavaScript, even without exploring multithreading. It didn’t even suffer at smaller payload sizes, where you might expect the overhead of calling into WASM to cause a lag. If you have performance-critical Azure Functions that are CPU-bound (i.e. they aren’t just waiting on network requests but are genuinely carrying out calculations), then Rust could be a valid way to speed them up.
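A rough cost model helps explain why the overhead of calling into WASM matters less as matrices grow. This is a back-of-the-envelope sketch, not a fit to the measurements above: it assumes naive cubic-time multiplication of n by n matrices, a fixed per-call overhead c for entering the WebAssembly module, and a per-element cost d for copying the two inputs and the output across the boundary. The symbols k_JS, k_Rust, c and d are illustrative, not measured values, and costs shared by both functions (such as the Azure Functions invocation itself) are left out because they cancel in the difference.

```latex
\begin{aligned}
T_{\text{JS}}(n)   &\approx k_{\text{JS}}\, n^3 \\
T_{\text{Rust}}(n) &\approx c + 3d\, n^2 + k_{\text{Rust}}\, n^3 \\
T_{\text{JS}}(n) - T_{\text{Rust}}(n) &\approx (k_{\text{JS}} - k_{\text{Rust}})\, n^3 - 3d\, n^2 - c
\end{aligned}
```

Whenever k_JS > k_Rust, the cubic term eventually outgrows the quadratic copying cost and the constant overhead, so Rust should win for large enough n; at 300 by 300 that cubic term already counts 300^3 = 2.7 × 10^7 multiply-adds. Whether Rust also wins at small n depends on how large c and d are, and the smaller-matrix result suggests they were modest in this setup.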

© 2022 - Built by Daniel Bass