Resilient Global Serverless Apps with Blazor + Azure

How to build a full-stack serverless app that is deployed to the entire world and splits traffic between instances depending on where your customers are

Previously, I showed you how to build a serverless CRUD app on Azure using just C#, with Blazor on the frontend and Azure Functions on the backend. In this article, I take that app and, with virtually no code changes, deploy its frontend, backend, and database to every Azure cloud region in the world. This is effectively an implementation of the Geode pattern, with the addition of a globalized Blazor frontend. Take a look at the repository here for the source code.

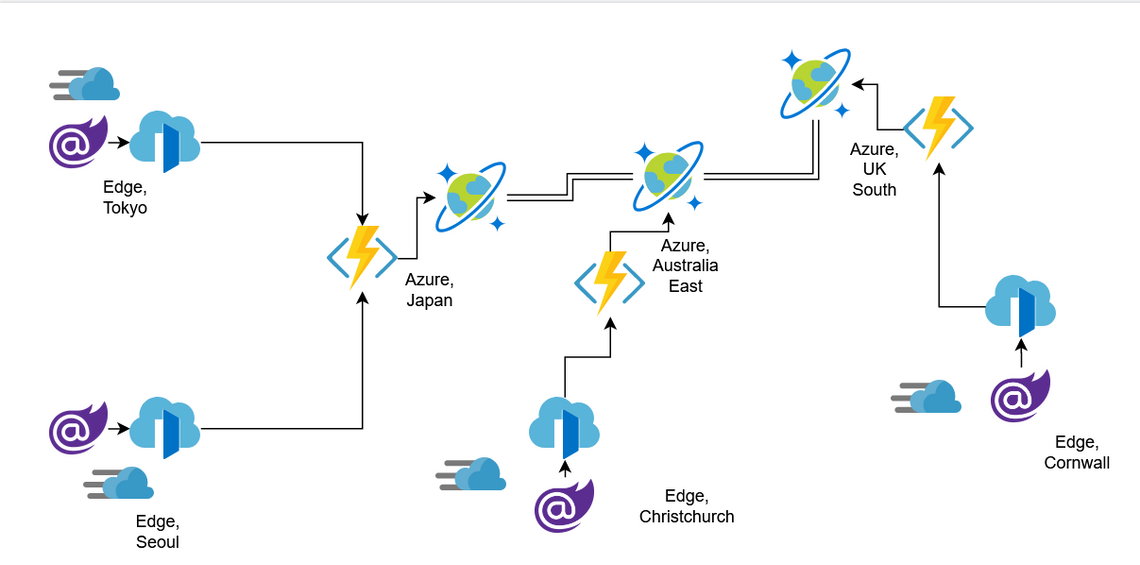

This article is not a comprehensive walkthrough of how to build this. I’ll aim to try and get one written up, but for now this is a more high-level introduction into how it works, with code provided for a fully working and deployed solution that more technical users can go into themselves. Let’s take a look at a diagram:

Steps

Firstly, we deploy the frontend to Azure CDN. This service caches the files that make up your frontend on servers around the world. It isn’t unique to Azure; there are plenty of providers, with Amazon CloudFront (AWS) and Cloudflare being particularly popular. Because the frontend of our application is just static files, it’s well suited to a CDN: you can cache the files indefinitely until your next release, at which point you purge the cache and let it repopulate.
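
To make the caching behaviour concrete, here is a toy model of a single CDN edge server. It is a sketch, not anything Azure CDN actually runs: the `EdgeCache` class, the file path, and its contents are hypothetical. The point is that a static file is fetched from the origin once, served from the edge with no expiry, and only refreshed after a purge on release.

```python
class EdgeCache:
    """Toy model of one CDN edge server that caches static files until purged."""

    def __init__(self, origin):
        self.origin = origin      # path -> file contents in origin storage
        self.cached = {}          # path -> contents this edge has already fetched
        self.origin_fetches = 0   # requests that had to go back to the origin

    def get(self, path):
        if path not in self.cached:
            # Cache miss: fetch from the origin once, then keep it with no expiry.
            self.cached[path] = self.origin[path]
            self.origin_fetches += 1
        return self.cached[path]

    def purge(self):
        """Run on each release so the next requests repopulate from the origin."""
        self.cached.clear()


# Hypothetical file path and contents, for illustration only.
origin = {"/index.html": "v1 page"}
edge = EdgeCache(origin)
for _ in range(3):
    edge.get("/index.html")
print(edge.origin_fetches)          # 1: only the first request reached the origin

origin["/index.html"] = "v2 page"   # a new release is uploaded to the origin
print(edge.get("/index.html"))      # still "v1 page": the edge keeps serving its copy
edge.purge()
print(edge.get("/index.html"))      # "v2 page" after the purge
```

Because nothing expires on its own, the purge step is what ties cache freshness to your release process.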

Once a user has visited your CDN and loaded your webpage in record time (because the server is physically close to them), the Blazor app will try to call the backend, the Azure Function. If the Azure Function were hosted only in the UK, this would cause problems and rob you of most of the benefit of the CDN, because every request would have to travel across the world, massively increasing latency. So what we can do is deploy an Azure Function to every region in the (Azure) world. But how does the Blazor app know which Azure Function, in which region, to call?
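
A rough calculation shows why a single UK backend hurts distant users. This sketch assumes signals travel through optical fibre at about 200,000 km/s (roughly two thirds of the speed of light) along the great-circle path. That gives a physical lower bound, because real routes are longer and add processing time. The city coordinates are approximate and the helper names are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBRE_SPEED_KM_PER_S = 200_000  # assumption: light in optical fibre, roughly 2/3 of c


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km):
    """Lower bound: there and back at fibre speed, ignoring routing and processing."""
    return 2 * distance_km / FIBRE_SPEED_KM_PER_S * 1000


LONDON = (51.5074, -0.1278)   # approximate coordinates
SYDNEY = (-33.8688, 151.2093)
d = great_circle_km(*LONDON, *SYDNEY)
print(f"{d:.0f} km, at least {min_round_trip_ms(d):.0f} ms per round trip")
```

For London to Sydney the distance comes out at roughly 17,000 km. That means about 170 ms for every round trip before any routing or server time, and a page that makes several sequential API calls pays that cost each time.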

Azure Front Door is a service that lets you connect multiple copies of a backend service and distribute requests between them based on some criteria. If you choose Geo/Lowest latency routing, it forwards each request it receives to what it estimates is the closest and fastest instance of the backend. It also monitors backend health, so if there is a region outage it seamlessly redirects traffic to the closest functioning backend. We use this to distribute requests from our Blazor app to our backend.
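
The routing decision can be modelled as picking the lowest-latency backend that is currently passing its health probe. This is a simplified sketch, not Front Door’s actual algorithm. The `Backend` type and the latency figures are hypothetical, and the region names are only examples.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    region: str
    latency_ms: float   # hypothetical measured latency to this backend
    healthy: bool       # result of the most recent health probe


def pick_backend(backends):
    """Route to the lowest-latency backend that is currently passing health probes."""
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends available")
    return min(healthy, key=lambda b: b.latency_ms)


backends = [
    Backend("uksouth", 8, True),
    Backend("westeurope", 15, True),
    Backend("eastus", 80, True),
]
print(pick_backend(backends).region)   # uksouth
backends[0].healthy = False            # simulate a UK South outage
print(pick_backend(backends).region)   # westeurope
```

Failover falls out of the same rule: an unhealthy region is simply excluded, and the next-closest healthy region wins.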

Finally, what would be the point of having all of these Azure Functions across the world if they all read from and wrote to a single database in the UK? That would again defeat much of the purpose of global distribution, introducing a cross-world network hop and a single point of failure. Luckily, there is a service called Cosmos DB. It has many benefits, but the ones we’re interested in are its ability to replicate data across multiple regions around the world and its support for the Azure Table Storage API. We can therefore deploy a Cosmos DB account using the Table API to each region that has an Azure Function in it, finally creating a full application stack in each region. You can tune the consistency you want your database to exhibit, from strong consistency (where writes must be confirmed across regions, so users editing the same record in different regions are held up by network latency) to eventual consistency. Which one you choose depends on your app’s requirements, but broadly, the closer to eventual consistency you get, the better your app’s global performance will be, as it needs less cross-region coordination.
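
The trade-off between the two ends of the consistency spectrum can be illustrated with a toy model of one record replicated between two regions. This is a hypothetical sketch, not how Cosmos DB is implemented. It models only the two extremes; Cosmos DB also offers intermediate levels such as session consistency. The class name, the regions, and the 150 ms lag are assumptions for illustration.

```python
class ReplicatedTable:
    """Toy model of a single record replicated to several regions."""

    def __init__(self, regions, replication_lag_ms):
        self.lag = replication_lag_ms               # hypothetical one-way delay between regions
        self.replicas = {r: None for r in regions}  # region -> value that region would return
        self.pending = []                           # (apply_at_ms, region, value) awaiting replication

    def write(self, region, value, now_ms, strong):
        """Write in `region` and return the time the write is acknowledged."""
        self.replicas[region] = value
        others = [r for r in self.replicas if r != region]
        if strong:
            # Strong: the write is only acknowledged once every region has it.
            # In this toy the replicas are updated in memory straight away; the cost
            # shows up as the writer waiting for a cross-region round trip.
            for r in others:
                self.replicas[r] = value
            return now_ms + 2 * self.lag
        # Eventual: acknowledge immediately and replicate in the background.
        for r in others:
            self.pending.append((now_ms + self.lag, r, value))
        return now_ms

    def read(self, region, now_ms):
        """Apply any replication that has arrived by `now_ms`, then read locally."""
        arrived = [p for p in self.pending if p[0] <= now_ms]
        for _, r, value in arrived:
            self.replicas[r] = value
        self.pending = [p for p in self.pending if p[0] > now_ms]
        return self.replicas[region]


# Hypothetical regions and lag, for illustration only.
eventual = ReplicatedTable(["uksouth", "australiaeast"], replication_lag_ms=150)
print(eventual.write("uksouth", "v1", now_ms=0, strong=False))  # 0: acknowledged at once
print(eventual.read("australiaeast", now_ms=50))    # None: replica not updated yet
print(eventual.read("australiaeast", now_ms=200))   # v1

strong = ReplicatedTable(["uksouth", "australiaeast"], replication_lag_ms=150)
print(strong.write("uksouth", "v1", now_ms=0, strong=True))     # 300: waited a round trip
print(strong.read("australiaeast", now_ms=300))     # v1
```

With eventual consistency the write returns immediately, but a reader in the other region briefly sees stale data. With strong consistency every reader sees the new value, at the price of the writer waiting for a cross-region round trip.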

There are some gotchas in Cosmos DB pricing. I signed up for the free tier, with its promise of 400 RU/s and global replication. This immediately started charging my account relatively large sums of money, despite the ‘free’ tier. This is because for each region you replicate to, Cosmos DB provisions a full copy of the throughput: replicated across 10 regions, it was consuming 4000 RU/s, of which only 400 were free. This could be highlighted more prominently in the documentation, as it caught me out. If you plan to use this architecture, I recommend that you either deploy your database only to regions where you have a lot of users, or wait for Cosmos DB’s serverless mode to be released so that you only pay for the RUs you use.
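
The arithmetic behind that bill is simple enough to sketch. It rests on two assumptions taken from the paragraph: every replica region is provisioned with the full throughput, and the free-tier allowance (400 RU/s at the time of writing) is counted only once. The sketch leaves out prices, which vary by region and change over time.

```python
def billable_ru_per_s(provisioned_ru_per_s, regions, free_ru_per_s=400):
    """RU/s you pay for when every replica region gets the full provisioned throughput."""
    consumed = provisioned_ru_per_s * regions   # throughput is provisioned per region
    return max(consumed - free_ru_per_s, 0)     # the free allowance is counted once


print(billable_ru_per_s(400, regions=1))    # 0: a single region fits in the free allowance
print(billable_ru_per_s(400, regions=10))   # 3600 of the 4000 RU/s consumed are billable
```

The billable throughput grows linearly with the number of replica regions. This is why limiting replication to regions with many users makes such a difference.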

What’s the point?

This has several benefits beyond being cool. If you are building a modern app, you are likely to have users across the world, and usually the users furthest from whichever Azure region you chose for your app suffer from poor latency, hindering your attempts to gain market share there. Globally deployed apps also have tremendous resilience: an app deployed like this would only go down in a global Azure outage, something that is extremely rare for any of the big cloud providers. Microsoft applies software updates one region at a time, and regions are organized in pairs where one region in each pair receives an update first. This means that even a software bug should not cause a global outage. Short of a global nuclear war, this application will keep running. If you’re in an industry where people could die if a service becomes unavailable (think medical services, military, charity, shipping, etc.), this is of the utmost importance.
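
A back-of-the-envelope availability calculation shows why multi-region deployment helps. It assumes regions fail independently, which is a strong simplification. The staggered, paired-region updates exist precisely to stop one change from taking down several regions at once. The 99.9% per-region figure is hypothetical.

```python
def p_all_regions_down(per_region_availability, regions):
    """Probability that every region is down at once, assuming independent failures."""
    return (1 - per_region_availability) ** regions


def app_availability(per_region_availability, regions):
    """Probability that at least one region can serve traffic."""
    return 1 - p_all_regions_down(per_region_availability, regions)


# Hypothetical per-region availability of 99.9%, for illustration only.
for n in (1, 2, 10):
    print(n, "regions: P(all down) =", f"{p_all_regions_down(0.999, n):.0e}")
```

Under that assumption, two regions are down together only about one time in a million, and each extra region multiplies that probability by another 0.001. Correlated failures, such as a bad change rolled out everywhere at once, fall outside this model.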

It’s also been built in a serverless way, which means zero infrastructure management. To put this into context, imagine trying to create a globally distributed application based on virtual machines and other compute primitives. You’d need to create some sort of distributed load balancer, and make sure it stayed up and healthy whilst deployed to multiple VM clusters across the world. You’d basically have to roll your own everything, down to the networking stack. Keeping this running would easily take five full-time employees (FTEs) for a simple app, and entire teams for a large, complex app with lots of traffic. This would quickly cost millions in headcount, never mind hosting.

The serverless approach takes virtually no management. There is no infrastructure maintenance; generally, the only things you need to debug are application-level issues. There are no capacity issues to plan for, as everything autoscales. The application will automatically heal itself from region outages without your intervention.

I think this solution would be a great thing to build into Azure Static Web Apps, which is still an early product: all of this is boilerplate that seems straightforward enough for the platform to handle entirely behind the scenes.

There are downsides to this architecture that make it unsuitable for some use cases. If your data is subject to strict data residency controls, you may need to adapt this quite a lot to stay within those rules. It also has some fixed monthly costs: the Front Door instance costs £15 a month to keep running, so it isn’t entirely serverless. The complexity of Cosmos DB pricing can also make monthly costs climb; hopefully the serverless tier will be released in the next month or so, which will address this. Finally, it is complex, so any problems will be complex problems! Always follow YAGNI (You ain’t gonna need it) when building a solution to avoid this.

In conclusion, I present a working solution for deploying a fully serverless C# CRUD application to the entire world, enabling unbeatable levels of availability and resilience combined with low latency for users across the globe. The ARM template trickery needed to implement this is quite complex; do feel free to take a look here.

© 2022 - Built by Daniel Bass